Starting from Scratch: A Guide to Installing a Deep Learning Software Environment (Windows 10)

GitHub repository for this article: https://github.com/philferriere/dlwin

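This configuration was last updated in July of this year. The update lets you use three different GPU-accelerated Keras backends locally and adds support for the MKL BLAS library.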

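There are many guides to building a deep learning (DL) environment on Linux or Mac OS, but few describe in full how to set up an efficient deep learning development environment on Windows 10. Many developers dual-boot Windows and Ubuntu, or run a virtual machine on Windows, to get a DL environment. Beginners, however, would often rather configure everything directly on Windows. The author, Phil Ferriere, therefore published this tutorial on GitHub. It builds a Keras deep learning development environment step by step, starting from basic environment-variable configuration.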

If you want to set up a deep learning environment on Windows 10, this article should give you plenty of useful information.

01 Dependencies

Here are the tools and packages we need to set up the deep learning environment on Windows 10 (Version 1607 OS Build 14393.222):

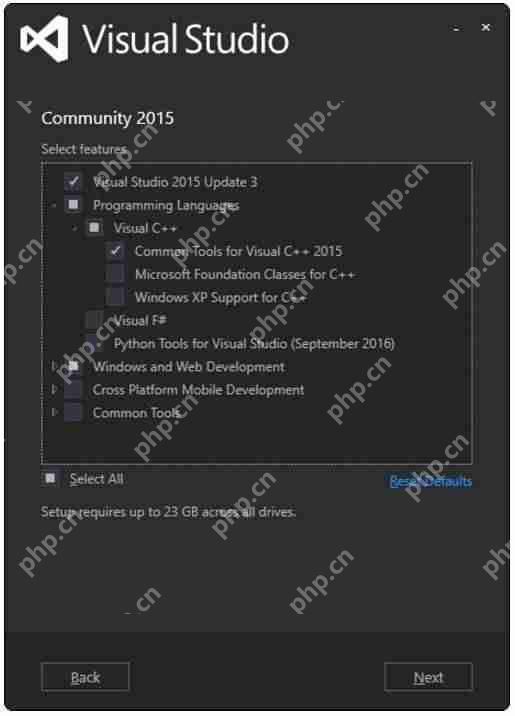

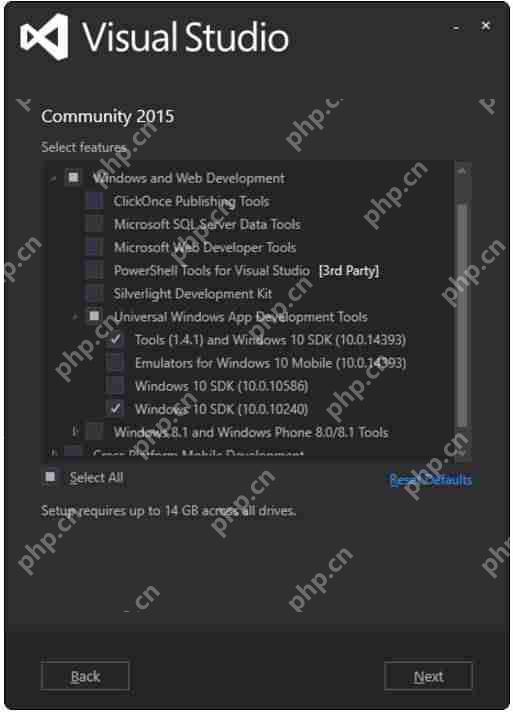

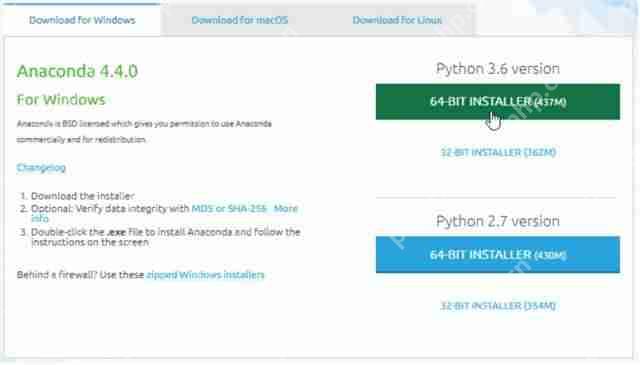

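- Visual Studio 2015 Community Edition Update 3 w. Windows Kit 10.0.10240.0: used for its C/C++ compiler (not its IDE) and its SDK. This exact version was chosen because it is the Windows compiler supported by CUDA 8.0.61.
- Anaconda (64-bit) w. Python 3.6 (Anaconda3-4.4.0) [for TensorFlow support] or Python 2.7 (Anaconda2-4.4.0) [no TensorFlow support] with MKL: Anaconda is an open-source Python distribution. It bundles conda, Python, NumPy, SciPy and more than 180 other scientific packages, together with their dependencies. MKL uses the CPU to accelerate many linear algebra operations.
- CUDA 8.0.61 (64-bit): CUDA is NVIDIA's general-purpose parallel computing platform, which lets the GPU tackle complex computational problems. The package provides the GPU math libraries, a display driver, and the CUDA compiler.
- cuDNN v5.1 (Jan 20, 2017) for CUDA 8.0: accelerates the operations used in convolutional neural networks.
- Keras 2.0.5 with three different backends (Theano 0.9.0, TensorFlow-gpu 1.2.0, and CNTK 2.0): Keras provides a high-level deep learning API on top of a backend framework such as Theano, TensorFlow, or CNTK. Each backend implements tensor math differently, so performance can vary between them.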

02 Hardware

- Dell Precision T7900, 64GB RAM: Intel Xeon E5-2630 v4 @ 2.20 GHz (1 processor, 10 cores total, 20 logical processors)
- NVIDIA GeForce Titan X, 12GB RAM: Driver version: 372.90 / Win 10 64

03 Installation Steps

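We like to keep all toolkits and packages under a single root folder (here e:\toolkits.win). Whenever a path below starts with e:\toolkits.win, replace it with the drive and folder you chose for your own toolkits.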

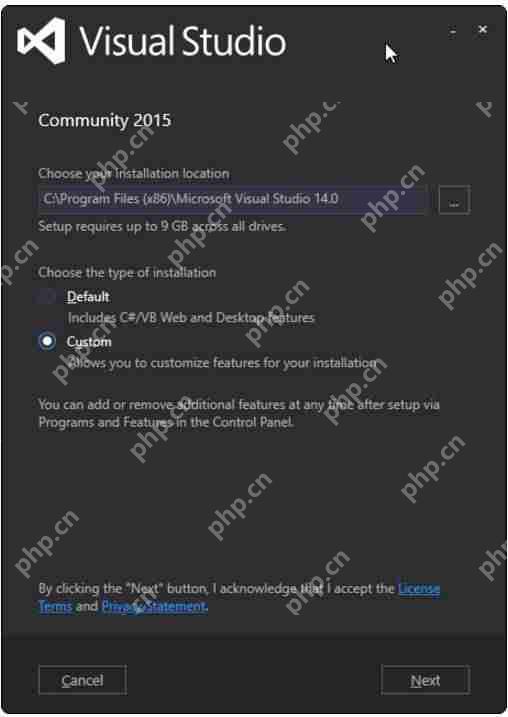

Visual Studio 2015 Community Edition Update 3 w. Windows Kit 10.0.10240.0

Download: https://www.visualstudio.com/vs/older-downloads

Run the downloaded package to install Visual Studio. A few extra configuration steps are then needed:

- Add C:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\bin to PATH (adjust this to wherever VS 2015 was installed).
- Define the system environment variable (sysenv variable) INCLUDE with the value C:\Program Files (x86)\Windows Kits\10\Include\10.0.10240.0\ucrt
- Define the system environment variable (sysenv variable) LIB with the value C:\Program Files (x86)\Windows Kits\10\Lib\10.0.10240.0\um\x64;C:\Program Files (x86)\Windows Kits\10\Lib\10.0.10240.0\ucrt\x64
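You can double-check these settings from a fresh command prompt once Python is available (Anaconda is installed in the next step). The minimal sketch below assumes only the standard library. The helper `missing_dirs` is our own hypothetical name, not part of any tool. It simply reports any ";"-separated entry of INCLUDE or LIB that is not an existing folder.

```python
import os


def missing_dirs(env_value, sep=";"):
    """Return the entries of a separator-joined variable that are not existing directories."""
    entries = [e.strip() for e in env_value.split(sep) if e.strip()]
    return [e for e in entries if not os.path.isdir(e)]


if __name__ == "__main__":
    # INCLUDE and LIB are the two sysenv variables defined above.
    for var in ("INCLUDE", "LIB"):
        value = os.environ.get(var, "")
        if not value:
            print(f"{var} is not set")
            continue
        missing = missing_dirs(value)
        print(f"{var}: " + ("OK" if not missing else "missing " + ", ".join(missing)))
```

A typo in one of the long Windows Kits paths is easy to miss by eye, and this check catches it.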

Anaconda 4.4.0 (64-bit) (Python 3.6 TF support / Python 2.7 no TF support)

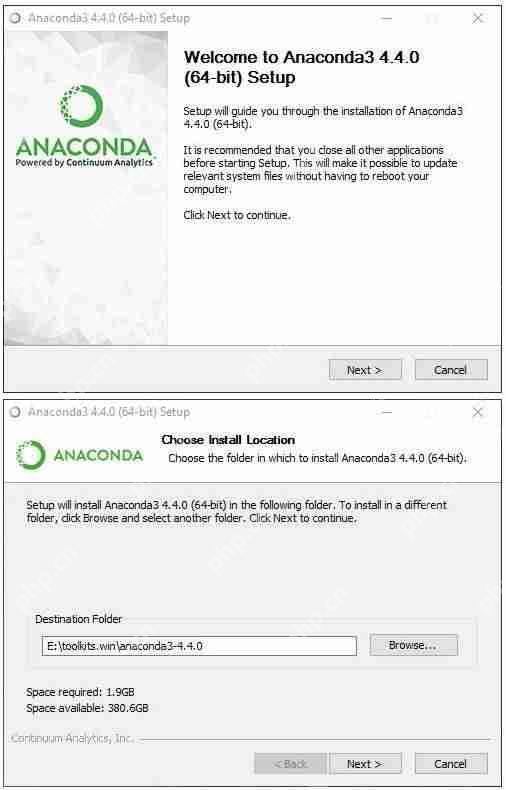

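This tutorial originally used Python 2.7. Now that TensorFlow can serve as a Keras backend, we have switched to Python 3.6 as the default. Depending on your preference, use e:\toolkits.win\anaconda3-4.4.0 or e:\toolkits.win\anaconda2-4.4.0 as the Anaconda installation folder.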

Anaconda for Python 3.6: https://repo.continuum.io/archive/Anaconda3-4.4.0-Windows-x86_64.exe

Anaconda for Python 2.7: https://repo.continuum.io/archive/Anaconda2-4.4.0-Windows-x86_64.exe

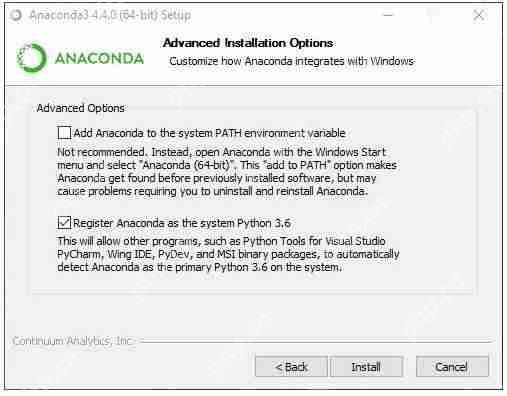

Run the installer to complete the installation.

As shown in the screenshot above, this tutorial selected the second option, although it is not necessarily the best choice.

Define the following variable and update PATH:

- Define the system environment variable (sysenv variable) PYTHON_HOME with the value e:\toolkits.win\anaconda3-4.4.0
- Add %PYTHON_HOME%, %PYTHON_HOME%\Scripts and %PYTHON_HOME%\Library\bin to PATH
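The sketch below checks that the three PYTHON_HOME folders actually ended up on PATH. `missing_path_entries` is a hypothetical helper written for this guide. The comparison is case-insensitive and ignores slash direction and trailing backslashes, because Windows treats those path variants as the same folder. The fallback path is the example root used in this article, so substitute your own.

```python
import ntpath
import os


def missing_path_entries(path_value, required):
    """Return the required folders that do not appear in a ';'-separated Windows PATH string."""
    # ntpath.normcase lowercases and turns '/' into '\'; rstrip drops a trailing backslash.
    present = {ntpath.normcase(p.strip()).rstrip("\\") for p in path_value.split(";") if p.strip()}
    return [r for r in required if ntpath.normcase(r).rstrip("\\") not in present]


if __name__ == "__main__":
    home = os.environ.get("PYTHON_HOME", r"e:\toolkits.win\anaconda3-4.4.0")
    required = [home, ntpath.join(home, "Scripts"), ntpath.join(home, "Library", "bin")]
    missing = missing_path_entries(os.environ.get("PATH", ""), required)
    print(missing or "all PYTHON_HOME entries are on PATH")
```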

Create the dlwin36 conda environment

After installing Anaconda, open a Windows command prompt and run:

    # Create the environment:
    $ conda create --yes -n dlwin36 numpy scipy mkl-service m2w64-toolchain libpython jupyter
    #
    # To activate this environment, use:
    # > activate dlwin36
    #
    # To deactivate this environment, use:
    # > deactivate dlwin36
    #
    # * for power-users using bash, you must source
    #

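As shown above, activate the new environment with the activate dlwin36 command. If you already have an older dlwin36 environment, remove it first with conda env remove -n dlwin36.

Since we plan to use the GPU, why install a CPU-optimized linear algebra library such as MKL at all? In our setup the GPU does most of the deep learning work, but the CPU is not idle. Data augmentation is a key part of image-based Kaggle competitions. Here, data augmentation means producing additional input samples (that is, more training images) by transforming the original training samples with image-processing operators. Basic transformations such as downsampling and mean-centering normalization are also required. If you are feeling adventurous, you can try additional preprocessing enhancements such as denoising and histogram equalization.

You could run these steps on the GPU and save the results to files. In practice, however, they usually run in parallel on the CPU while the GPU is busy learning the network's weights, and the augmented data is discarded after use. We therefore strongly recommend installing MKL. Theano also benefits from a fast BLAS library such as MKL.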
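The minimal NumPy sketch below shows the kind of CPU-side work described above: random shifts followed by mean-centering. `augment_batch` is a hypothetical helper for illustration only. It is neither part of Keras nor the author's pipeline, and Keras ships its own `ImageDataGenerator` for this job. It uses `np.random.RandomState` so that it also runs with the older NumPy in the dlwin36 environment.

```python
import numpy as np


def augment_batch(images, rng, max_shift=2):
    """Return a randomly shifted, zero-mean copy of a batch of images.

    images: float array of shape (N, H, W, C); rng: a numpy RandomState.
    """
    out = np.empty_like(images)
    for i, img in enumerate(images):
        # Random translation of up to max_shift pixels along rows and columns.
        # np.roll wraps pixels around the border, which is acceptable for
        # images with an empty background such as MNIST digits.
        dy, dx = rng.randint(-max_shift, max_shift + 1, size=2)
        out[i] = np.roll(img, shift=(dy, dx), axis=(0, 1))
    # Mean-centering: subtract each image's own mean so it averages to zero.
    out -= out.mean(axis=(1, 2, 3), keepdims=True)
    return out


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    batch = rng.rand(4, 28, 28, 1).astype(np.float32)
    augmented = augment_batch(batch, rng)
    print(augmented.shape, float(np.abs(augmented.mean(axis=(1, 2, 3))).max()))
```

Running `numpy.show_config()` inside the activated environment shows which BLAS/LAPACK libraries NumPy was built against. This is a quick way to confirm that MKL is in use.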

CUDA 8.0.61 (64-bit)

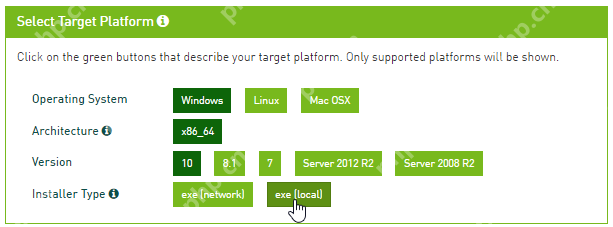

Download CUDA 8.0 (64-bit) from the NVIDIA website: https://developer.nvidia.com/cuda-downloads

Select the appropriate operating system:

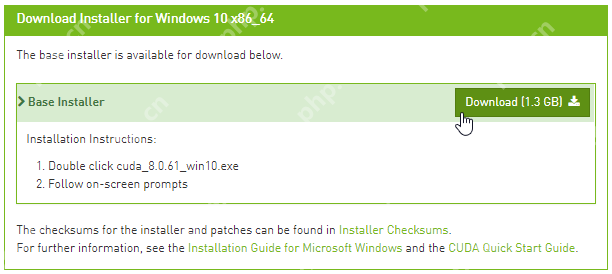

Download the installer:

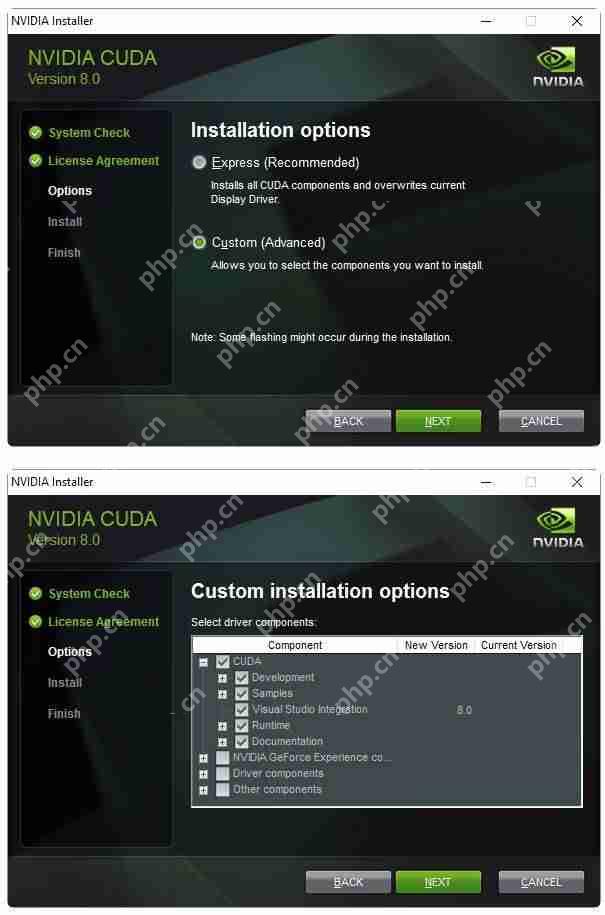

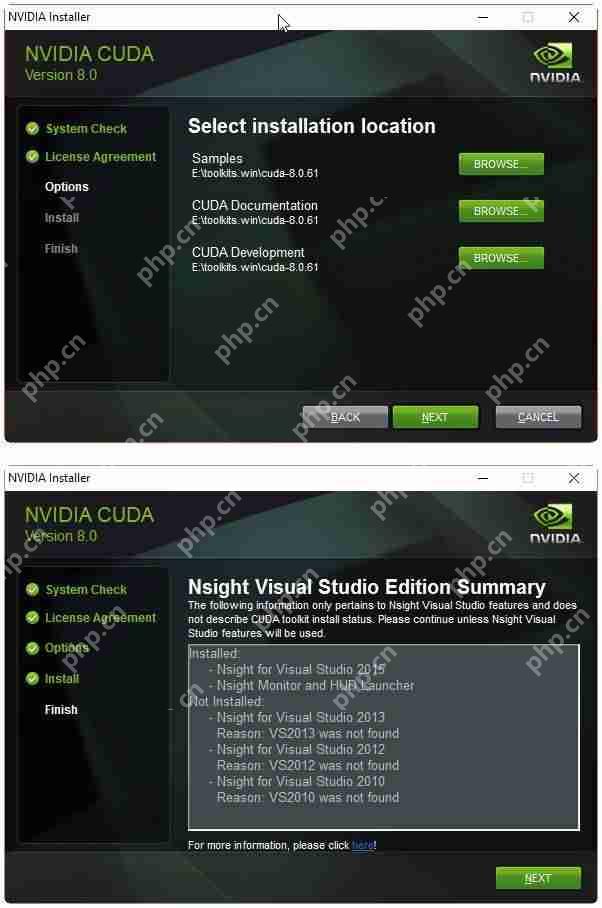

Run the installer and install the files into e:\toolkits.win\cuda-8.0.61.

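When installation finishes, the installer should have created a system environment variable (sysenv variable) named CUDA_PATH. It should also have added %CUDA_PATH%\bin and %CUDA_PATH%\libnvvp to PATH. Check that both changes were actually made. If the CUDA environment variables are wrong for any reason, complete the following two steps: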

- Define a system environment variable named CUDA_PATH with the value e:\toolkits.win\cuda-8.0.61
- Add %CUDA_PATH%\bin and %CUDA_PATH%\libnvvp to PATH

cuDNN v5.1 (Jan 20, 2017) for CUDA 8.0

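According to NVIDIA, "cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization, and activation layers." It is an acceleration library designed for convolutional neural networks and deep learning.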

cuDNN download page: https://developer.nvidia.com/rdp/cudnn-download

Choose the cuDNN package that matches your CUDA version and the Windows 10 compiler. Here, cuDNN 5.1 supports both CUDA 8.0 and Windows 10.

The downloaded ZIP file contains three folders (bin, include, lib). Extract these three folders into %CUDA_PATH%.
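If you prefer to script the extraction step, the sketch below merges the three folders into CUDA_PATH. `merge_cudnn` is a hypothetical helper, not an NVIDIA tool. Point `cudnn_root` at the extracted folder that directly contains bin, include and lib, and check your ZIP's layout first because we are assuming it here. Files with the same name are overwritten and everything else is left in place. The helper uses `os.walk` rather than newer `shutil` options so that it also runs on the Python 3.6 used in this guide.

```python
import os
import shutil
import sys


def merge_cudnn(cudnn_root, cuda_path, subdirs=("bin", "include", "lib")):
    """Copy every file under cudnn_root/{bin,include,lib} into the same sub-folder of cuda_path.

    Returns the list of destination file paths that were written.
    """
    written = []
    for sub in subdirs:
        src_root = os.path.join(cudnn_root, sub)
        # os.walk yields nothing if src_root does not exist, so missing folders are skipped.
        for dirpath, _dirnames, filenames in os.walk(src_root):
            rel = os.path.relpath(dirpath, cudnn_root)  # e.g. "lib\x64"
            dst_dir = os.path.join(cuda_path, rel)
            os.makedirs(dst_dir, exist_ok=True)
            for name in filenames:
                dst = os.path.join(dst_dir, name)
                shutil.copy2(os.path.join(dirpath, name), dst)
                written.append(dst)
    return written


if __name__ == "__main__" and len(sys.argv) == 2 and "CUDA_PATH" in os.environ:
    # Usage: python merge_cudnn.py <folder that contains bin, include and lib>
    for path in merge_cudnn(sys.argv[1], os.environ["CUDA_PATH"]):
        print(path)
```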

Install Keras 2.0.5 and Theano 0.9.0 with libgpuarray

Run the following command to install libgpuarray 0.6.2, the only stable version for Theano 0.9.0:

    (dlwin36) $ conda install pygpu==0.6.2 nose
    # Output:
    Fetching package metadata ...........
    Solving package specifications: .

    Package plan for installation in environment e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36:

    The following NEW packages will be INSTALLED:

        libgpuarray: 0.6.2-vc14_0 [vc14]
        nose:        1.3.7-py36_1
        pygpu:       0.6.2-py36_0

    Proceed ([y]/n)? y

Enter the following command to install Keras and Theano:

    (dlwin36) $ pip install keras==2.0.5
    # Output:
    Collecting keras==2.0.5
    Requirement already satisfied: six in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from keras==2.0.5)
    Collecting pyyaml (from keras==2.0.5)
    Collecting theano (from keras==2.0.5)
    Requirement already satisfied: scipy>=0.14 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from theano->keras==2.0.5)
    Requirement already satisfied: numpy>=1.9.1 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from theano->keras==2.0.5)
    Installing collected packages: pyyaml, theano, keras
    Successfully installed keras-2.0.5 pyyaml-3.12 theano-0.9.0

Install the CNTK 2.0 backend

Following the CNTK installation documentation, we can install CNTK with this pip command:

    (dlwin36) $ pip install https://cntk.ai/PythonWheel/GPU/cntk-2.0-cp36-cp36m-win_amd64.whl
    # Output:
    Collecting cntk==2.0 from https://cntk.ai/PythonWheel/GPU/cntk-2.0-cp36-cp36m-win_amd64.whl
      Using cached https://cntk.ai/PythonWheel/GPU/cntk-2.0-cp36-cp36m-win_amd64.whl
    Requirement already satisfied: numpy>=1.11 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from cntk==2.0)
    Requirement already satisfied: scipy>=0.17 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from cntk==2.0)
    Installing collected packages: cntk
    Successfully installed cntk-2.0

This installation also places additional CUDA and cuDNN DLLs in the conda environment directory:

    (dlwin36) $ cd E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36
    (dlwin36) $ dir cu*.dll
    # Output:
     Volume in drive E is datasets
     Volume Serial Number is 1ED0-657B

     Directory of E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36

    06/30/2017  02:47 PM        40,744,896 cublas64_80.dll
    06/30/2017  02:47 PM           366,016 cudart64_80.dll
    06/30/2017  02:47 PM        78,389,760 cudnn64_5.dll
    06/30/2017  02:47 PM        47,985,208 curand64_80.dll
    06/30/2017  02:47 PM        41,780,280 cusparse64_80.dll
                   5 File(s)    209,266,160 bytes
                   0 Dir(s)  400,471,019,520 bytes free

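The problem is not the wasted disk space. The cuDNN version installed here differs from the one we installed under e:\toolkits.win\cuda-8.0.61. Because the DLLs in the conda environment directory are loaded first, we need to move them out of that directory, which is on %PATH%: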

    (dlwin36) $ md discard & move cu*.dll discard
    # Output:
    E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\cublas64_80.dll
    E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\cudart64_80.dll
    E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\cudnn64_5.dll
    E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\curand64_80.dll
    E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\cusparse64_80.dll
            5 file(s) moved.
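To confirm that no duplicate copies remain on PATH, the sketch below lists every PATH folder that contains each of the five DLLs shown above, in PATH order. `dll_locations` is a hypothetical helper. Walking PATH only approximates the real Windows DLL search order, because Windows also checks a few other locations before PATH. A DLL found in more than one folder is a candidate for the version clash described above.

```python
import os

# The five DLLs found in the conda environment directory above.
CUDA_DLLS = ["cublas64_80.dll", "cudart64_80.dll", "cudnn64_5.dll",
             "curand64_80.dll", "cusparse64_80.dll"]


def dll_locations(dll_names, search_dirs):
    """Map each DLL name to the directories (in search order) that contain it."""
    return {name: [d for d in search_dirs if os.path.isfile(os.path.join(d, name))]
            for name in dll_names}


if __name__ == "__main__":
    path_dirs = [p for p in os.environ.get("PATH", "").split(os.pathsep) if p]
    for name, hits in dll_locations(CUDA_DLLS, path_dirs).items():
        status = "DUPLICATE" if len(hits) > 1 else ("ok" if hits else "not found")
        print(f"{name}: {status} {hits}")
```

After the move, each DLL should be reported once, from %CUDA_PATH%\bin.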

Install the TensorFlow-GPU 1.2.0 backend

Run the following command to install TensorFlow with pip:

    (dlwin36) $ pip install tensorflow-gpu==1.2.0
    # Output:
    Collecting tensorflow-gpu==1.2.0
      Using cached tensorflow_gpu-1.2.0-cp36-cp36m-win_amd64.whl
    Requirement already satisfied: bleach==1.5.0 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from tensorflow-gpu==1.2.0)
    Requirement already satisfied: numpy>=1.11.0 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from tensorflow-gpu==1.2.0)
    Collecting markdown==2.2.0 (from tensorflow-gpu==1.2.0)
    Requirement already satisfied: wheel>=0.26 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from tensorflow-gpu==1.2.0)
    Collecting protobuf>=3.2.0 (from tensorflow-gpu==1.2.0)
    Collecting backports.weakref==1.0rc1 (from tensorflow-gpu==1.2.0)
      Using cached backports.weakref-1.0rc1-py3-none-any.whl
    Collecting html5lib==0.9999999 (from tensorflow-gpu==1.2.0)
    Collecting werkzeug>=0.11.10 (from tensorflow-gpu==1.2.0)
      Using cached Werkzeug-0.12.2-py2.py3-none-any.whl
    Requirement already satisfied: six>=1.10.0 in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages (from tensorflow-gpu==1.2.0)
    Requirement already satisfied: setuptools in e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\setuptools-27.2.0-py3.6.egg (from protobuf>=3.2.0->tensorflow-gpu==1.2.0)
    Installing collected packages: markdown, protobuf, backports.weakref, html5lib, werkzeug, tensorflow-gpu
      Found existing installation: html5lib 0.999
        DEPRECATION: Uninstalling a distutils installed project (html5lib) has been deprecated and will be removed in a future version. This is due to the fact that uninstalling a distutils project will only partially uninstall the project.
        Uninstalling html5lib-0.999:
          Successfully uninstalled html5lib-0.999
    Successfully installed backports.weakref-1.0rc1 html5lib-0.9999999 markdown-2.2.0 protobuf-3.3.0 tensorflow-gpu-1.2.0 werkzeug-0.12.2

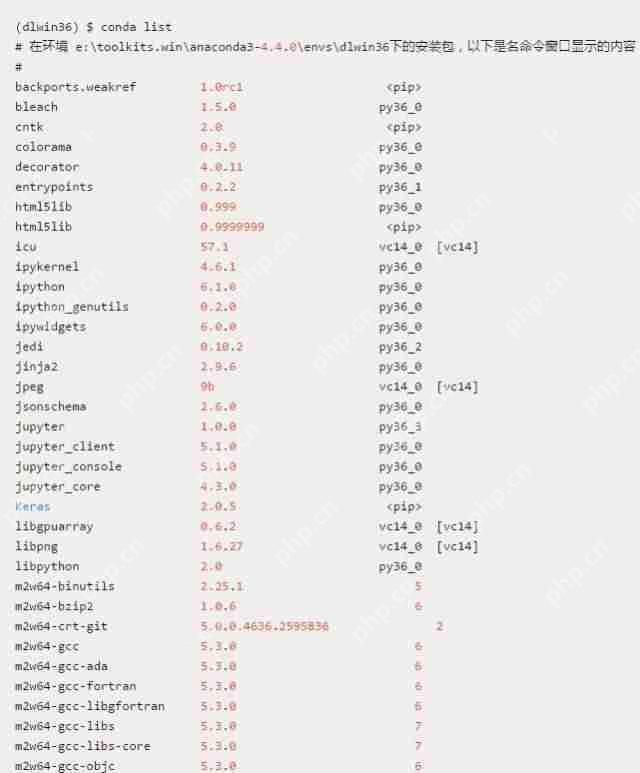

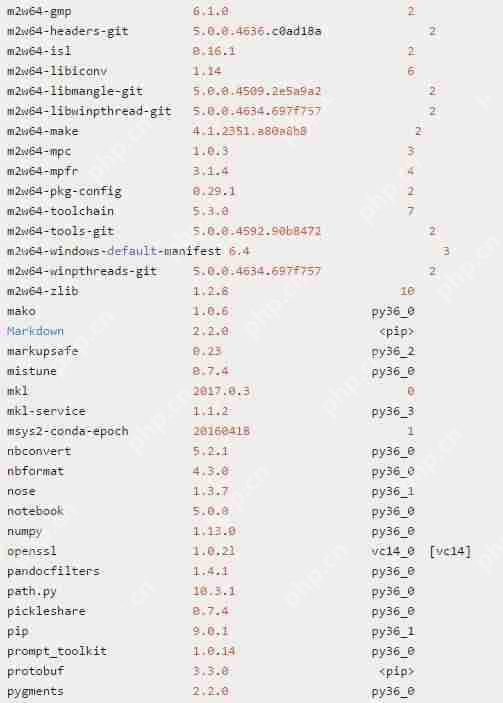

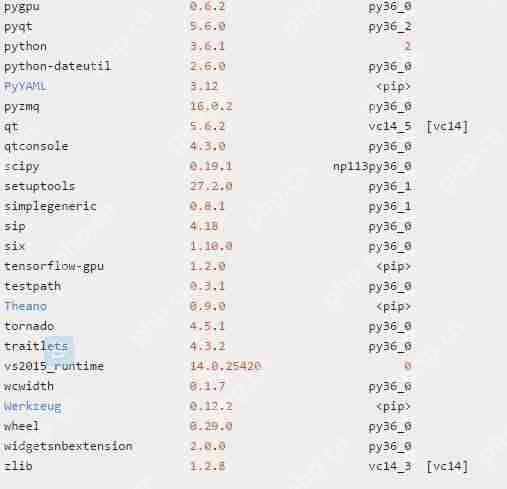

Check the installed packages with conda

After completing the installation and configuration above, the dlwin36 conda environment should contain the following packages:

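To quickly check that the three backends were installed correctly, run the following commands one after another. They check the Theano (and pygpu), TensorFlow, and CNTK imports: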

    (dlwin36) $ python -c "import theano; print('theano: %s, %s' % (theano.__version__, theano.__file__))"
    theano: 0.9.0, E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\theano\__init__.py
    (dlwin36) $ python -c "import pygpu; print('pygpu: %s, %s' % (pygpu.__version__, pygpu.__file__))"
    pygpu: 0.6.2, e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\pygpu\__init__.py
    (dlwin36) $ python -c "import tensorflow; print('tensorflow: %s, %s' % (tensorflow.__version__, tensorflow.__file__))"
    tensorflow: 1.2.0, E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\tensorflow\__init__.py
    (dlwin36) $ python -c "import cntk; print('cntk: %s, %s' % (cntk.__version__, cntk.__file__))"
    cntk: 2.0, E:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\cntk\__init__.py
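The four one-liners above can also be folded into a single script that compares each installed version with the one this guide targets. This is a hedged sketch: `check_versions` and `EXPECTED` are our own names, and the expected versions are taken from the dependency list at the top of the article. A module that fails to import, for example because of a DLL problem, is reported instead of crashing the script.

```python
import importlib

# Versions this guide installs (from the dependency list above).
EXPECTED = {"keras": "2.0.5", "theano": "0.9.0", "pygpu": "0.6.2",
            "tensorflow": "1.2.0", "cntk": "2.0"}


def check_versions(expected):
    """Return {module: (installed version or error text, matches_expected)}."""
    report = {}
    for name, want in expected.items():
        try:
            mod = importlib.import_module(name)
        except Exception as exc:  # ImportError, or a DLL load failure raised during import
            report[name] = ("import failed: %s" % exc, False)
            continue
        have = getattr(mod, "__version__", "unknown")
        report[name] = (have, have == want)
    return report


if __name__ == "__main__":
    for name, (have, ok) in check_versions(EXPECTED).items():
        print("%-10s %-12s %s" % (name, have, "OK" if ok else "CHECK"))
```

Run it from inside the activated dlwin36 environment so that the imports resolve to that environment's site-packages.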

Validate the Theano installation

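Theano was installed automatically as a Keras dependency. To switch quickly between CPU mode, GPU mode, and GPU mode with cuDNN, we create the following three system environment variables (sysenv variables):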

- Define the system environment variable THEANO_FLAGS_CPU with the value: floatX=float32,device=cpu
- Define the system environment variable THEANO_FLAGS_GPU with the value: floatX=float32,device=cuda0,dnn.enabled=False,gpuarray.preallocate=0.8
- Define the system environment variable THEANO_FLAGS_GPU_DNN with the value: floatX=float32,device=cuda0,optimizer_including=cudnn,gpuarray.preallocate=0.8,dnn.conv.algo_bwd_filter=deterministic,dnn.conv.algo_bwd_data=deterministic,dnn.include_path=e:/toolkits.win/cuda-8.0.61/include,dnn.library_path=e:/toolkits.win/cuda-8.0.61/lib/x64
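The same switch can be made from inside a Python script. Theano reads THEANO_FLAGS when it is first imported, so the copy has to happen before `import theano`. In the sketch below, `parse_theano_flags` and `select_theano_mode` are hypothetical helpers. The parser only splits the `key=value,key=value` format used in the three variables above, and it does not validate option names.

```python
import os


def parse_theano_flags(flags):
    """Split a THEANO_FLAGS string ('key=value,key=value') into a dict."""
    result = {}
    for item in flags.split(","):
        item = item.strip()
        if not item:
            continue
        key, _, value = item.partition("=")  # split on the first '=' only
        result[key.strip()] = value.strip()
    return result


def select_theano_mode(mode):
    """Copy THEANO_FLAGS_<MODE> into THEANO_FLAGS; call this before `import theano`."""
    value = os.environ["THEANO_FLAGS_" + mode.upper()]
    os.environ["THEANO_FLAGS"] = value
    return parse_theano_flags(value)


if __name__ == "__main__" and "THEANO_FLAGS_GPU_DNN" in os.environ:
    for key, value in select_theano_mode("gpu_dnn").items():
        print(f"{key} = {value}")
```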

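We can now make Theano use the CPU, the GPU, or the GPU with cuDNN by copying THEANO_FLAGS_CPU, THEANO_FLAGS_GPU, or THEANO_FLAGS_GPU_DNN into THEANO_FLAGS. Use the following commands to verify that these variables were added to the environment: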

    (dlwin36) $ set KERAS_BACKEND=theano
    (dlwin36) $ set | findstr /i theano
    KERAS_BACKEND=theano
    THEANO_FLAGS=floatX=float32,device=cuda0,optimizer_including=cudnn,gpuarray.preallocate=0.8,dnn.conv.algo_bwd_filter=deterministic,dnn.conv.algo_bwd_data=deterministic,dnn.include_path=e:/toolkits.win/cuda-8.0.61/include,dnn.library_path=e:/toolkits.win/cuda-8.0.61/lib/x64
    THEANO_FLAGS_CPU=floatX=float32,device=cpu
    THEANO_FLAGS_GPU=floatX=float32,device=cuda0,dnn.enabled=False,gpuarray.preallocate=0.8
    THEANO_FLAGS_GPU_DNN=floatX=float32,device=cuda0,optimizer_including=cudnn,gpuarray.preallocate=0.8,dnn.conv.algo_bwd_filter=deterministic,dnn.conv.algo_bwd_data=deterministic,dnn.include_path=e:/toolkits.win/cuda-8.0.61/include,dnn.library_path=e:/toolkits.win/cuda-8.0.61/lib/x64

For more detailed Theano validation code and commands, see the original article.

Check the system environment variables

Now, whenever the dlwin36 conda environment is activated, the PATH environment variable should look like the following list:

Validate the GPU+cuDNN installation with Keras

We can verify that the GPU and cuDNN are installed correctly by using Keras to train a simple convolutional neural network (convnet) on the MNIST dataset. The script, mnist_cnn.py, is one of the Keras examples:

Keras example: https://github.com/fchollet/keras/blob/2.0.5/examples/mnist_cnn.py
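The runs below switch backends by hand with `set`. If you would rather launch all four configurations in one go, here is a small Python driver sketch. It is our own convenience script, not part of Keras or the original guide. It assumes mnist_cnn.py sits in the current folder and that the THEANO_FLAGS_* variables defined earlier exist. `run_env` is a hypothetical helper that builds each child process's environment without touching the parent's.

```python
import os
import subprocess
import sys

# (label, KERAS_BACKEND value, THEANO_FLAGS_* variable to copy or None), mirroring the runs below.
RUNS = [
    ("theano-cpu", "theano", "THEANO_FLAGS_CPU"),
    ("theano-gpu-dnn", "theano", "THEANO_FLAGS_GPU_DNN"),
    ("tensorflow", "tensorflow", None),
    ("cntk", "cntk", None),
]


def run_env(backend, flags_var, base_env):
    """Return a copy of base_env configured for one Keras backend."""
    env = dict(base_env)
    env["KERAS_BACKEND"] = backend
    if flags_var is not None:
        env["THEANO_FLAGS"] = base_env[flags_var]
    return env


if __name__ == "__main__" and os.path.isfile("mnist_cnn.py"):
    for label, backend, flags_var in RUNS:
        print("=== %s ===" % label, flush=True)
        subprocess.run([sys.executable, "mnist_cnn.py"],
                       env=run_env(backend, flags_var, os.environ), check=False)
```

Each run gets a fresh Python process, which matters because Keras picks its backend, and Theano reads its flags, only once at import time.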


1. Keras with the Theano backend

To get a baseline for comparison, we first train the simple convnet with the Theano backend on the CPU:

    (dlwin36) $ set KERAS_BACKEND=theano
    (dlwin36) $ set THEANO_FLAGS=%THEANO_FLAGS_CPU%
    (dlwin36) $ python mnist_cnn.py
    # Training progress and results:
    Using Theano backend.
    x_train shape: (60000, 28, 28, 1)
    60000 train samples
    10000 test samples
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/12
    60000/60000 [==============================] - 233s - loss: 0.3344 - acc: 0.8972 - val_loss: 0.0743 - val_acc: 0.9777
    Epoch 2/12
    60000/60000 [==============================] - 234s - loss: 0.1106 - acc: 0.9674 - val_loss: 0.0504 - val_acc: 0.9837
    Epoch 3/12
    60000/60000 [==============================] - 237s - loss: 0.0865 - acc: 0.9741 - val_loss: 0.0402 - val_acc: 0.9865
    Epoch 4/12
    60000/60000 [==============================] - 238s - loss: 0.0692 - acc: 0.9792 - val_loss: 0.0362 - val_acc: 0.9874
    Epoch 5/12
    60000/60000 [==============================] - 241s - loss: 0.0614 - acc: 0.9821 - val_loss: 0.0370 - val_acc: 0.9879
    Epoch 6/12
    60000/60000 [==============================] - 245s - loss: 0.0547 - acc: 0.9839 - val_loss: 0.0319 - val_acc: 0.9885
    Epoch 7/12
    60000/60000 [==============================] - 248s - loss: 0.0517 - acc: 0.9840 - val_loss: 0.0293 - val_acc: 0.9900
    Epoch 8/12
    60000/60000 [==============================] - 256s - loss: 0.0465 - acc: 0.9863 - val_loss: 0.0294 - val_acc: 0.9905
    Epoch 9/12
    60000/60000 [==============================] - 264s - loss: 0.0422 - acc: 0.9870 - val_loss: 0.0276 - val_acc: 0.9902
    Epoch 10/12
    60000/60000 [==============================] - 263s - loss: 0.0423 - acc: 0.9875 - val_loss: 0.0287 - val_acc: 0.9902
    Epoch 11/12
    60000/60000 [==============================] - 262s - loss: 0.0389 - acc: 0.9884 - val_loss: 0.0291 - val_acc: 0.9898
    Epoch 12/12
    60000/60000 [==============================] - 270s - loss: 0.0377 - acc: 0.9885 - val_loss: 0.0272 - val_acc: 0.9910
    Test loss: 0.0271551907005
    Test accuracy: 0.991

Next, we use the following commands to train the convnet with the Theano backend on the GPU with cuDNN:

    (dlwin36) $ set THEANO_FLAGS=%THEANO_FLAGS_GPU_DNN%
    (dlwin36) $ python mnist_cnn.py
    Using Theano backend.
    Using cuDNN version 5110 on context None
    Preallocating 9830/12288 Mb (0.800000) on cuda0
    Mapped name None to device cuda0: GeForce GTX TITAN X (0000:03:00.0)
    x_train shape: (60000, 28, 28, 1)
    60000 train samples
    10000 test samples
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/12
    60000/60000 [==============================] - 17s - loss: 0.3219 - acc: 0.9003 - val_loss: 0.0774 - val_acc: 0.9743
    Epoch 2/12
    60000/60000 [==============================] - 16s - loss: 0.1108 - acc: 0.9674 - val_loss: 0.0536 - val_acc: 0.9822
    Epoch 3/12
    60000/60000 [==============================] - 16s - loss: 0.0832 - acc: 0.9766 - val_loss: 0.0434 - val_acc: 0.9862
    Epoch 4/12
    60000/60000 [==============================] - 16s - loss: 0.0694 - acc: 0.9795 - val_loss: 0.0382 - val_acc: 0.9876
    Epoch 5/12
    60000/60000 [==============================] - 16s - loss: 0.0605 - acc: 0.9819 - val_loss: 0.0353 - val_acc: 0.9884
    Epoch 6/12
    60000/60000 [==============================] - 16s - loss: 0.0533 - acc: 0.9836 - val_loss: 0.0360 - val_acc: 0.9883
    Epoch 7/12
    60000/60000 [==============================] - 16s - loss: 0.0482 - acc: 0.9859 - val_loss: 0.0305 - val_acc: 0.9897
    Epoch 8/12
    60000/60000 [==============================] - 16s - loss: 0.0452 - acc: 0.9865 - val_loss: 0.0295 - val_acc: 0.9911
    Epoch 9/12
    60000/60000 [==============================] - 16s - loss: 0.0414 - acc: 0.9878 - val_loss: 0.0315 - val_acc: 0.9898
    Epoch 10/12
    60000/60000 [==============================] - 16s - loss: 0.0386 - acc: 0.9886 - val_loss: 0.0282 - val_acc: 0.9911
    Epoch 11/12
    60000/60000 [==============================] - 16s - loss: 0.0378 - acc: 0.9887 - val_loss: 0.0306 - val_acc: 0.9904
    Epoch 12/12
    60000/60000 [==============================] - 16s - loss: 0.0354 - acc: 0.9893 - val_loss: 0.0296 - val_acc: 0.9898
    Test loss: 0.0296215178292
    Test accuracy: 0.9898

Each epoch now takes only about 16 seconds, a large improvement over the roughly 250 seconds per epoch on the CPU (with the same batch size).
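To make this comparison concrete, the sketch below averages the per-epoch times from the two Theano logs above and computes the speedup. `epoch_seconds` is a hypothetical helper that extracts the "NNNs" field from Keras 2.0.x progress lines. Its regular expression assumes exactly that line format, so treat it as a convenience for logs like these rather than a general parser.

```python
import re

# Matches the per-epoch duration in Keras 2.0.x progress lines such as
# "60000/60000 [=====] - 233s - loss: 0.3344 ...". Accepts "-" or an en dash.
EPOCH_TIME = re.compile(r"\]\s*[-\u2013]\s*(\d+)s\s*[-\u2013]")


def epoch_seconds(log_text):
    """Return the per-epoch durations (in seconds) found in a Keras training log."""
    return [int(s) for s in EPOCH_TIME.findall(log_text)]


# Per-epoch times copied from the two Theano runs above.
cpu_times = [233, 234, 237, 238, 241, 245, 248, 256, 264, 263, 262, 270]  # CPU
gpu_times = [17] + [16] * 11                                             # GPU + cuDNN

cpu_mean = sum(cpu_times) / len(cpu_times)
gpu_mean = sum(gpu_times) / len(gpu_times)
speedup = cpu_mean / gpu_mean
print("mean CPU epoch: %.2f s, mean GPU epoch: %.2f s, speedup: %.1fx" % (cpu_mean, gpu_mean, speedup))
# prints: mean CPU epoch: 249.25 s, mean GPU epoch: 16.08 s, speedup: 15.5x
```

In other words, GPU plus cuDNN makes this Theano run roughly 15 times faster than the CPU baseline.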

2. Keras with the TensorFlow backend

To activate and test the TensorFlow backend, use the following commands:

    (dlwin36) $ set KERAS_BACKEND=tensorflow
    (dlwin36) $ python mnist_cnn.py
    Using TensorFlow backend.
    x_train shape: (60000, 28, 28, 1)
    60000 train samples
    10000 test samples
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/12
    2017-06-30 12:49:22.005585: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.005767: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE2 instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.005996: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE3 instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.006181: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.006361: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.006539: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.006717: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.006897: W c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.
    2017-06-30 12:49:22.453483: I c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:940] Found device 0 with properties:
    name: GeForce GTX TITAN X
    major: 5 minor: 2 memoryClockRate (GHz) 1.076
    pciBusID 0000:03:00.0
    Total memory: 12.00GiB
    Free memory: 10.06GiB
    2017-06-30 12:49:22.454375: I c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:961] DMA: 0
    2017-06-30 12:49:22.454489: I c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:971] 0: Y
    2017-06-30 12:49:22.454624: I c:\tf_jenkins\home\workspace\release-win\m\windows-gpu\py\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:1030] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GTX TITAN X, pci bus id: 0000:03:00.0)
    60000/60000 [==============================] - 8s - loss: 0.3355 - acc: 0.8979 - val_loss: 0.0749 - val_acc: 0.9760
    Epoch 2/12
    60000/60000 [==============================] - 5s - loss: 0.1134 - acc: 0.9667 - val_loss: 0.0521 - val_acc: 0.9825
    Epoch 3/12
    60000/60000 [==============================] - 5s - loss: 0.0863 - acc: 0.9745 - val_loss: 0.0436 - val_acc: 0.9854
    Epoch 4/12
    60000/60000 [==============================] - 5s - loss: 0.0722 - acc: 0.9787 - val_loss: 0.0381 - val_acc: 0.9872
    Epoch 5/12
    60000/60000 [==============================] - 5s - loss: 0.0636 - acc: 0.9811 - val_loss: 0.0339 - val_acc: 0.9880
    Epoch 6/12
    60000/60000 [==============================] - 5s - loss: 0.0552 - acc: 0.9838 - val_loss: 0.0328 - val_acc: 0.9888
    Epoch 7/12
    60000/60000 [==============================] - 5s - loss: 0.0515 - acc: 0.9851 - val_loss: 0.0318 - val_acc: 0.9893
    Epoch 8/12
    60000/60000 [==============================] - 5s - loss: 0.0479 - acc: 0.9862 - val_loss: 0.0311 - val_acc: 0.9891
    Epoch 9/12
    60000/60000 [==============================] - 5s - loss: 0.0441 - acc: 0.9870 - val_loss: 0.0310 - val_acc: 0.9898
    Epoch 10/12
    60000/60000 [==============================] - 5s - loss: 0.0407 - acc: 0.9871 - val_loss: 0.0302 - val_acc: 0.9903
    Epoch 11/12
    60000/60000 [==============================] - 5s - loss: 0.0405 - acc: 0.9877 - val_loss: 0.0309 - val_acc: 0.9892
    Epoch 12/12
    60000/60000 [==============================] - 5s - loss: 0.0373 - acc: 0.9886 - val_loss: 0.0309 - val_acc: 0.9898
    Test loss: 0.0308696583555
    Test accuracy: 0.9898

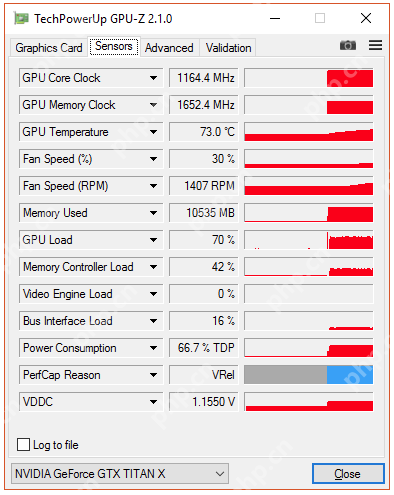

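On this task the TensorFlow backend is about 3 times faster than the Theano backend (about 5 s versus 16 s per epoch), even though both use the GPU and cuDNN. One possible reason is channel ordering. In this test both backends use the same ordering (channels last, as the x_train shape (60000, 28, 28, 1) shows), whereas the two platforms' native orderings differ. The program may therefore force the Theano backend to reorder the data, which could account for the performance gap. Even so, TensorFlow's GPU load never exceeded 70% in this case.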
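The two layouts behind this explanation can be illustrated with a few lines of NumPy. Keras calls them "channels_last" (samples, rows, cols, channels) and "channels_first" (samples, channels, rows, cols). The small stand-in array below is our own, and the snippet only shows the axis permutation. It does not measure what either backend does internally.

```python
import numpy as np

# Stand-in batch in the layout printed by mnist_cnn.py above:
# (samples, rows, cols, channels), i.e. Keras' "channels_last".
x_last = np.arange(8 * 28 * 28, dtype=np.float32).reshape(8, 28, 28, 1)

# "channels_first" moves the channel axis next to the sample axis:
# (samples, channels, rows, cols).
x_first = np.transpose(x_last, (0, 3, 1, 2))

# Applying the inverse permutation restores the original layout exactly.
x_back = np.transpose(x_first, (0, 2, 3, 1))

print(x_last.shape, "->", x_first.shape)  # (8, 28, 28, 1) -> (8, 1, 28, 28)
```

In Keras 2 the active layout is reported by `keras.backend.image_data_format()` and configured through the `image_data_format` entry in keras.json.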

3. Keras with the CNTK backend

To activate and test the CNTK backend, use the following commands:

    (dlwin36) $ set KERAS_BACKEND=cntk
    (dlwin36) $ python mnist_cnn.py
    Using CNTK backend
    Selected GPU[0] GeForce GTX TITAN X as the process wide default device.
    x_train shape: (60000, 28, 28, 1)
    60000 train samples
    10000 test samples
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/12
    e:\toolkits.win\anaconda3-4.4.0\envs\dlwin36\lib\site-packages\cntk\core.py:351: UserWarning: your data is of type "float64", but your input variable (uid "Input113") expects "

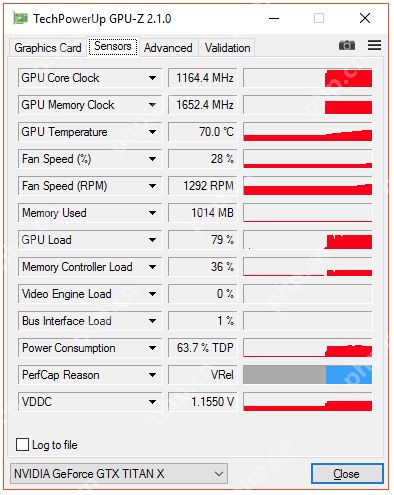

In our tests CNTK was also very fast, and its GPU load reached 80%.

END

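For submissions and feedback, please email hzzy@hzbook.com. To reprint articles from the Big Data WeChat account, please request permission from the original author; otherwise, Big Data bears no responsibility for any resulting copyright disputes.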